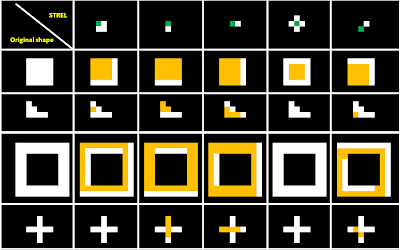

In this activity, we compress an image with PCA, in the same spirit as the lossy compression applied when saving to formats like JPEG (JPEG itself uses a fixed discrete cosine transform basis rather than PCA).

An image of size MxM may be represented as a point in an M^2-dimensional space. However, representing the image as a point in such a high-dimensional space is computationally expensive. That's where PCA (Principal Components Analysis) comes in. With PCA, we can find a new coordinate system with fewer dimensions, whose basis vectors are eigenvectors, for representing the image. The pca() function in Scilab outputs the eigenvectors as well as their corresponding eigenvalues.
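To make the idea concrete, here is a minimal sketch in Python with NumPy (not the Scilab pca() call used for this activity). It assumes the image is cut into 10x10 blocks, so that each block becomes a point in a 100-dimensional space, and it uses a random array as a stand-in for a real image. The helper names `image_to_blocks` and `pca_basis` are made up for this illustration.

```python
import numpy as np

def image_to_blocks(image, block):
    """Cut a 2-D image into block x block tiles and flatten each tile into a row."""
    h, w = image.shape
    h -= h % block  # crop so the image divides evenly into tiles
    w -= w % block
    tiles = image[:h, :w].reshape(h // block, block, w // block, block)
    return tiles.swapaxes(1, 2).reshape(-1, block * block)

def pca_basis(samples):
    """Return eigenvalues (descending), matching eigenvectors (columns), and the mean."""
    mean = samples.mean(axis=0)
    centered = samples - mean
    cov = centered.T @ centered / (samples.shape[0] - 1)
    eigvals, eigvecs = np.linalg.eigh(cov)  # eigh: for symmetric matrices
    order = np.argsort(eigvals)[::-1]       # largest eigenvalue first
    return eigvals[order], eigvecs[:, order], mean

# Stand-in image (assumption): random values instead of a real photo.
rng = np.random.default_rng(0)
image = rng.random((40, 40))

blocks = image_to_blocks(image, 10)          # 16 points in 100-D space
eigvals, eigvecs, mean = pca_basis(blocks)
print(blocks.shape, eigvecs.shape)
```

Each column of `eigvecs` is one axis of the new coordinate system, and the matching entry of `eigvals` tells how much of the variation in the blocks lies along that axis.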

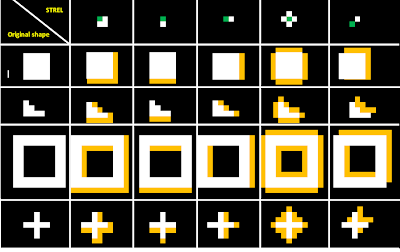

PCA reduces the amount of information that has to be stored. This is done by reconstructing the image using only the eigenvectors with the most significant (largest) eigenvalues.
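The reconstruction step can be sketched as follows, again in Python with NumPy rather than Scilab. This is only an illustration under my own assumptions: the function name `compress_with_pca` is hypothetical, the rows of `data` are the vectors being compressed, and the data is random rather than taken from a real image.

```python
import numpy as np

def compress_with_pca(data, k):
    """Project row vectors onto the k eigenvectors with the largest
    eigenvalues, then map them back to the original space."""
    mean = data.mean(axis=0)
    centered = data - mean
    cov = np.cov(centered, rowvar=False)          # columns are the variables
    eigvals, eigvecs = np.linalg.eigh(cov)
    keep = np.argsort(eigvals)[::-1][:k]          # indices of the k largest
    top = eigvecs[:, keep]
    coeffs = centered @ top                       # what would be stored
    return coeffs @ top.T + mean                  # the reconstruction

# Stand-in data (assumption): 32 random vectors of length 8.
rng = np.random.default_rng(0)
data = rng.random((32, 8))

full = compress_with_pca(data, 8)     # all eigenvectors kept
partial = compress_with_pca(data, 4)  # only half of them kept

print(np.allclose(full, data))
print(np.mean((partial - data) ** 2))
```

Keeping every eigenvector gives back the original data, while keeping only some of them gives an approximation. The savings come from storing `coeffs` (k numbers per vector) instead of the full vectors.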

Given the image:

Figure 1: Image to be compressed

Using PCA, we compress this image using 100%, 98%, 95% and 90% of the total eigenvectors.

100%:

98%:

95%:

90%:

As illustrated, the fewer eigenvectors are used to represent the image, the more pixelated it gets. This is because as you decrease the number of eigenvectors, less information is stored per pixel.
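The loss of information can also be measured instead of just seen. Below is a rough sketch in Python with NumPy (not the Scilab code used for the figures above) that keeps 100%, 98%, 95% and 90% of the eigenvectors and prints the mean squared error of each reconstruction. The 10x10 block size, the random stand-in image, and the helper names are all assumptions made for this example.

```python
import numpy as np

BLOCK = 10  # assumed block size, so each block has 100 values

def to_blocks(image, block=BLOCK):
    """Flatten each block x block tile of the image into one row."""
    h, w = image.shape
    h -= h % block
    w -= w % block
    tiles = image[:h, :w].reshape(h // block, block, w // block, block)
    return tiles.swapaxes(1, 2).reshape(-1, block * block)

def sorted_eigenvectors(samples):
    """Return the mean and the eigenvectors sorted by descending eigenvalue."""
    mean = samples.mean(axis=0)
    cov = np.cov(samples - mean, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(cov)
    return mean, eigvecs[:, np.argsort(eigvals)[::-1]]

# Stand-in image (assumption): random values instead of a real photo.
rng = np.random.default_rng(1)
image = rng.random((120, 120))

blocks = to_blocks(image)
mean, eigvecs = sorted_eigenvectors(blocks)
centered = blocks - mean

errors = {}
for percent in (100, 98, 95, 90):
    k = percent * eigvecs.shape[1] // 100   # number of eigenvectors kept
    basis = eigvecs[:, :k]
    restored = centered @ basis @ basis.T + mean
    errors[percent] = float(np.mean((restored - blocks) ** 2))
    print(f"{percent}% ({k} eigenvectors): MSE = {errors[percent]:.3e}")
```

With all eigenvectors the error is zero up to rounding, and it grows as more eigenvectors are dropped. A real photo usually loses much less than random noise does, since most of its variation sits in the first few eigenvectors.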

I would like to give myself a grade of 9/10 for this activity. I was able to get the results for compressing an image using PCA. I took off one point for not blogging it clearly.